
A Breakthrough in Agile Perception: The Design Behind SYNCROBOTIC’s Lifting-Head Quadruped Robot

2025-06-17

As quadruped robots (often referred to as “robot dogs”) expand into diverse industrial applications, equipping them with a head structure that is human-like, task-oriented, and perception-flexible has become a key challenge in system integration. This blog post unveils the design philosophy and technical insight behind SYNCROBOTIC’s movable head module — a breakthrough that enables robots to look up, significantly enhancing their capabilities across inspection, security, disaster response, and human interaction scenarios.

1. Why Should a Robot Dog Be Able to Look Up?

While quadruped robots excel at traversing rugged terrain, their naturally low height and fixed forward-facing cameras create blind spots — particularly when executing tasks like facial recognition, inspecting elevated structures, or monitoring heat sources above eye level. This limitation reduces both sensing efficiency and recognition accuracy.

To solve this, we designed a tilt-enabled head module with an adjustable pitch angle ranging from 0° to 90°, mimicking the way an animal raises its head. The expanded field of view not only improves task performance but also lays the groundwork for natural human-robot interaction, such as making eye contact or following a person’s gaze.
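To make the 0° to 90° range concrete, here is a minimal geometric sketch of how a pitch command could be derived for a target above the camera. It is an illustration only, not SYNCROBOTIC's control code: the function name, the flat-ground assumption, and the example heights are all hypothetical.

```python
import math

PITCH_MIN_DEG = 0.0   # level, forward-facing (lower limit stated in the post)
PITCH_MAX_DEG = 90.0  # pointing straight up (upper limit stated in the post)


def pitch_to_target(target_height_m, camera_height_m, distance_m):
    """Pitch angle in degrees that aims the camera at a target.

    Assumes flat ground and a target directly ahead of the robot.
    The result is clamped to the module's 0-90 degree range.
    """
    if distance_m < 0:
        raise ValueError("distance_m must be non-negative")
    rise = target_height_m - camera_height_m
    raw_deg = math.degrees(math.atan2(rise, distance_m))
    return max(PITCH_MIN_DEG, min(PITCH_MAX_DEG, raw_deg))


# Hypothetical numbers: a face at 1.7 m, camera at 0.5 m, 1.2 m away.
print(pitch_to_target(1.7, 0.5, 1.2))
```

With these example numbers the rise and the distance are equal, so the head would tilt to about 45°. A target below the camera clamps to 0°, which is exactly the blind-spot case a fixed forward-facing camera is limited to.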

2. Structural Innovation: Modular Head with Single-Axis Servo Drive

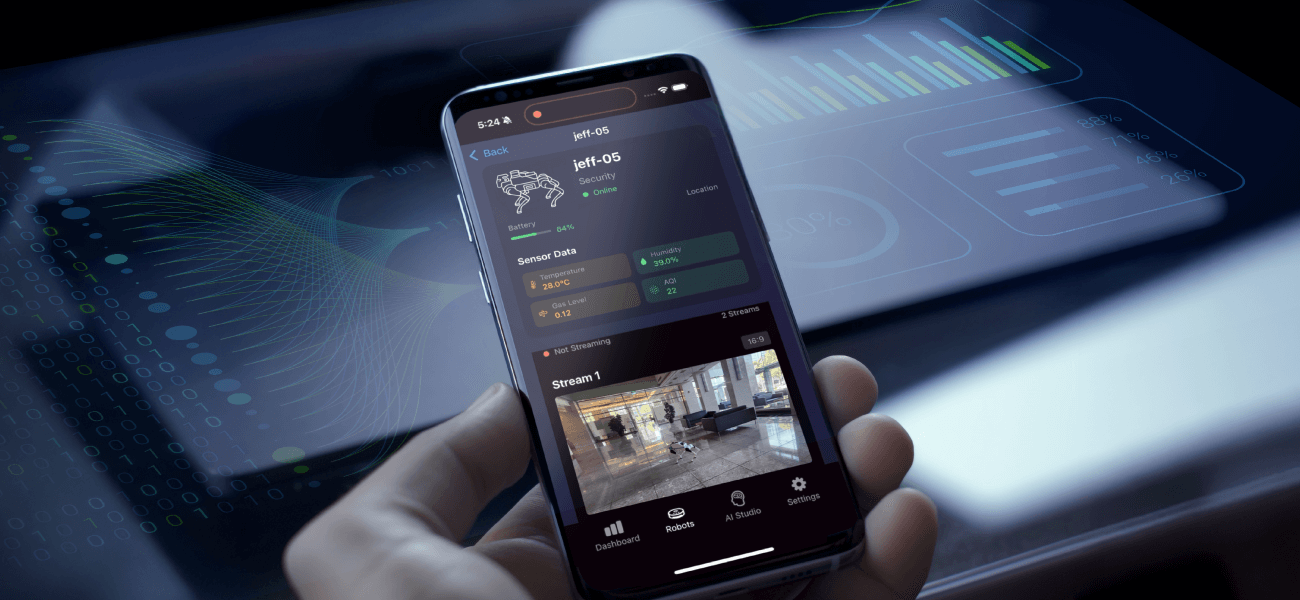

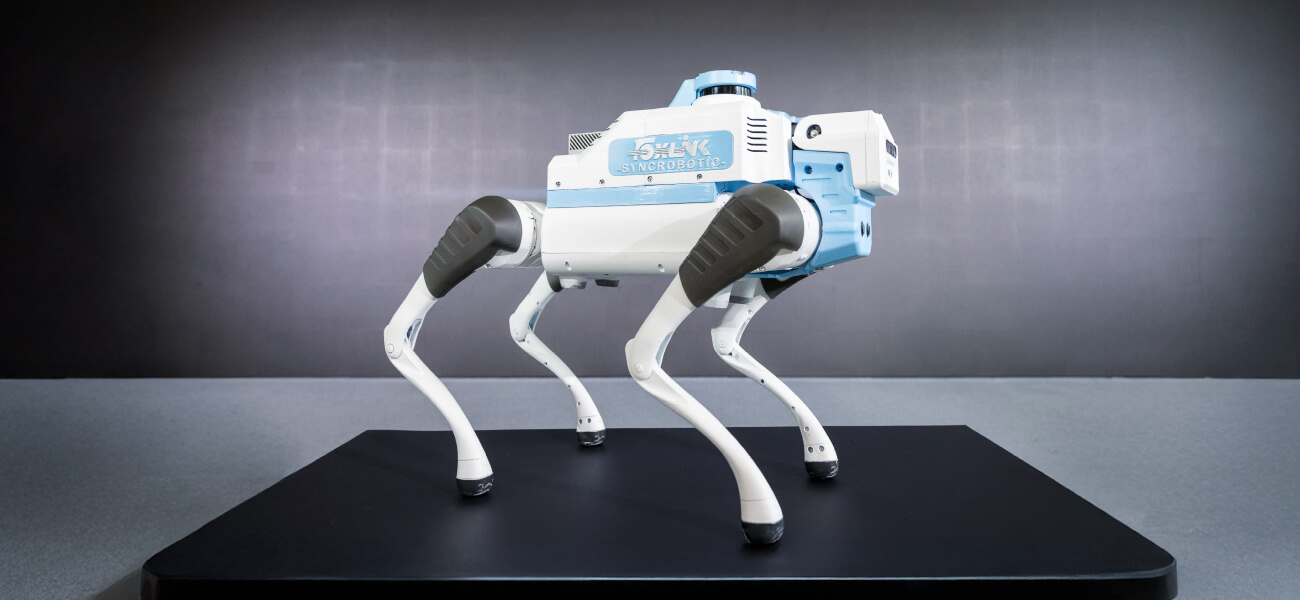

Our robot’s head module (as shown in Figures 1 and 2) is fully modular and mounted onto the standard robot chassis. It is driven by a high-torque precision servo motor, which enables vertical tilt through a single-axis rotation mechanism.

Figure 1. Full-body view of the SYNCROBOTIC quadruped robot

Figure 2. Head-lifting view of the SYNCROBOTIC quadruped robot

To maintain stability and reliability, the design addresses three points:

- Torque Optimization: High-torque, low-backlash servos prevent wobble during movement or impact.

- Cable Routing & Rotation Safety: Internal channels and mechanical stops in the rotating axis protect wires from twisting or abrasion.

- Quick-Swap Mounting: A modular latch mechanism allows for easy maintenance or sensor upgrades.
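The post does not describe the servo control loop, so the following is only a sketch of one common practice that complements mechanical stops: keeping commands inside a software range and limiting how far the setpoint moves per control tick. The function name, the 2° step size, and the loop are assumptions for illustration.

```python
def step_pitch(current_deg, target_deg, max_step_deg=2.0,
               min_deg=0.0, max_deg=90.0):
    """Return the next pitch setpoint, moving toward target_deg.

    The target is first clamped to [min_deg, max_deg] so a bad command
    never drives the axis into its mechanical stops, and each call moves
    at most max_step_deg to avoid abrupt motion.
    """
    target = max(min_deg, min(max_deg, target_deg))
    delta = target - current_deg
    delta = max(-max_step_deg, min(max_step_deg, delta))
    return current_deg + delta


# Ramp from level toward an out-of-range request of 120 degrees.
pitch = 0.0
for _ in range(50):
    pitch = step_pitch(pitch, 120.0)
print(pitch)  # settles at the 90-degree upper limit
```

Rate limiting in software is a design choice rather than a requirement. It trades a slightly slower head motion for gentler loads on the servo and on the cables routed through the rotating axis.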

3. Multimodal Perception Integration: Depth, Thermal, and RGB Vision

The head module (Figures 3 and 4) integrates three primary sensing technologies:

- Depth Cameras (Stereo / ToF): Provide 3D spatial mapping and obstacle avoidance capabilities.

- Thermal Imaging (Infrared Cameras): Detect abnormal heat sources for tasks like equipment monitoring or fire detection.

- RGB Cameras: Enable facial recognition, object classification, and general visual guidance.

These sensors are synchronized and physically integrated to achieve real-time sensor fusion, significantly enhancing perception reliability — particularly in challenging environments such as low light, outdoor glare, or subtle temperature differences.
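One simple way to picture sensor synchronization is nearest-timestamp matching: for each RGB frame, find the depth and thermal frames captured closest in time and keep the triple only if all three agree within a tolerance. The sketch below assumes software timestamps in seconds and a hypothetical 20 ms tolerance. The actual module may well rely on hardware triggering instead, which the post does not specify.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the value closest to t in a sorted, non-empty list."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    return i if after - t < t - before else i - 1


def match_frames(rgb_ts, depth_ts, thermal_ts, tolerance_s=0.02):
    """Return (rgb, depth, thermal) index triples that agree in time.

    Each input is a sorted list of capture timestamps in seconds.
    An RGB frame is dropped if either other sensor has no frame
    within tolerance_s of it.
    """
    matches = []
    if not depth_ts or not thermal_ts:
        return matches
    for i, t in enumerate(rgb_ts):
        d = nearest_index(depth_ts, t)
        th = nearest_index(thermal_ts, t)
        if (abs(depth_ts[d] - t) <= tolerance_s
                and abs(thermal_ts[th] - t) <= tolerance_s):
            matches.append((i, d, th))
    return matches


# Hypothetical timestamps: the third RGB frame has no close depth frame.
rgb = [0.00, 0.10, 0.20]
depth = [0.01, 0.11, 0.30]
thermal = [0.00, 0.09, 0.21]
print(match_frames(rgb, depth, thermal))  # [(0, 0, 0), (1, 1, 1)]
```

Only the matched triples would be passed on to fusion, so a dropped or late frame from one sensor does not get paired with stale data from the others.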

Figure 3. The quadruped robot’s depth camera, thermal imager, and IP camera

Figure 4. Perspective view of the quadruped robot’s head

4. Key Use Cases: Enabling Task Intelligence and HRI

This active head module is more than a perception upgrade — it is a foundation for Task Intelligence and Human-Robot Interaction (HRI). Here are some real-world scenarios it supports:

- Factory Inspection: Detects anomalies in elevated switchboards or compressor heat levels.

- Disaster Response: Locates heat signatures from trapped victims or ignition points under rubble.

- Security Patrol: Adjusts the tilt angle to lock onto and track human faces or suspicious behavior.

- Reception & Guidance: Simulates eye contact with users to increase social presence and interaction quality.
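As a rough illustration of the thermal side of these scenarios, the sketch below flags pixels that are much hotter than the rest of a thermal frame. It assumes the frame is already a 2D grid of temperatures in °C and uses the frame median as the baseline. The function name and the 15 °C margin are hypothetical, and a deployed inspection system would need calibration and per-asset thresholds.

```python
from statistics import median


def find_hotspots(frame_c, margin_c=15.0):
    """Return (row, col, temp_c) for pixels well above the frame median.

    frame_c is a list of rows of temperatures in degrees Celsius.
    A pixel counts as a hotspot when it exceeds the median of the
    whole frame by at least margin_c.
    """
    flat = [t for row in frame_c for t in row]
    if not flat:
        return []
    baseline = median(flat)
    return [
        (r, c, t)
        for r, row in enumerate(frame_c)
        for c, t in enumerate(row)
        if t - baseline >= margin_c
    ]


# Hypothetical 3x3 frame with one hot component in the centre.
frame = [
    [25.0, 26.0, 25.0],
    [25.0, 70.0, 26.0],
    [24.0, 25.0, 25.0],
]
print(find_hotspots(frame))  # [(1, 1, 70.0)]
```

Comparing against the frame's own median rather than a fixed temperature makes the check less sensitive to ambient conditions, at the cost of missing cases where the whole scene is uniformly hot.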

5. Looking Ahead: Toward Full-Spectrum Vision and Interactive AI

Our lifting-head module is built with extensibility in mind. Future developments will explore:

- Dual-Axis Design (Pitch + Yaw): For full-range panoramic perception.

- Sound Source Localization: To support gaze-following and directional awareness.

- AI-Driven Head Attention: Leveraging neural models to dynamically orient the head based on situational context and interactive cues.

This design is now part of our standard SYNCROBOTIC Dog Platform, aimed at advancing edge-AI and mission-centric robotics through continued industry collaboration and real-world deployment.